AI Concepts Made Easy: A Beginner's Manual (Part 5 of 5)

The Evolution of GPUs: From Gaming to Fueling the AI Revolution

In the world of technology, GPUs (Graphics Processing Units) have become the driving force of artificial intelligence - but they didn't start out that way. Originally built for rendering graphics and video in games, GPUs have evolved into powerful engines that are fueling the AI revolution. The AI chip market reflects this rapid growth: it is expected to reach $73.49 billion by 2025, growing at a compound annual growth rate (CAGR) of over 51% between 2021 and 2025 [1].
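For readers new to CAGR: it is the constant yearly growth rate that would carry a starting value to an ending value over n years. Here is a quick worked check of what "over 51%" means across the four years from 2021 to 2025, where V is the market size in a given year (only the standard CAGR definition is assumed):

```latex
\text{CAGR} = \left(\frac{V_{2025}}{V_{2021}}\right)^{1/4} - 1
\qquad\Longrightarrow\qquad
\frac{V_{2025}}{V_{2021}} = (1 + \text{CAGR})^{4} > 1.51^{4} \approx 5.2
```

In other words, a market growing at that rate would be more than five times larger in 2025 than in 2021.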

A key turning point came in the late 2000s, when Andrew Ng and his colleagues at Stanford published a paper [2] showing that graphics processing units (GPUs) could crunch the data for large learning models far faster than regular central processing units (CPUs).

GPUs were like a shortcut for heavy math. They excel at parallel processing: thousands of small cores perform many calculations at the same time. CPUs have a few powerful cores that mostly work through instructions one step after another - too slow for the massive math behind complex AI!

So Ng suggested souping up AI software with GPU power. These chips were monsters at the matrix math that neural networks run on. It was a total game changer!
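To make "matrix math" concrete, here is a small Python sketch (standard library only, not real GPU code) of why it parallelizes so well: every cell of a matrix product is an independent dot product, so the cells can be computed one after another, CPU-style, or handed out to many workers at once, GPU-style. The function names are hypothetical, and Python threads here only illustrate how the work splits up; they won't show a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product


def cell(a, b, i, j):
    # One cell of C = A x B: the dot product of row i of A and column j of B.
    # It reads A and B but never depends on any other cell of C.
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul_sequential(a, b):
    # CPU-style: compute the cells one after another.
    return [[cell(a, b, i, j) for j in range(len(b[0]))] for i in range(len(a))]


def matmul_parallel(a, b, workers=4):
    # GPU-style idea: hand every cell to a worker at once.
    # (Python threads only illustrate the split; they give no GPU-like speedup.)
    rows, cols = len(a), len(b[0])
    coords = list(product(range(rows), range(cols)))  # row-major (i, j) pairs
    with ThreadPoolExecutor(max_workers=workers) as pool:
        values = list(pool.map(lambda ij: cell(a, b, *ij), coords))  # order preserved
    return [values[r * cols:(r + 1) * cols] for r in range(rows)]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matmul_sequential(A, B))  # [[19, 22], [43, 50]]
print(matmul_parallel(A, B) == matmul_sequential(A, B))  # True
```

Both versions give the same answer because no cell depends on another. A GPU exploits exactly this property by assigning cells to thousands of threads that run simultaneously.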

Within a few years, GPUs became every AI researcher's best friend. Nvidia [3] kept making them faster for AI, and even small startups could now train capable AI models without massive computing clusters.

Understanding GPU and CPU Differences:

Modern GPUs are crazy powerful - they can perform trillions of calculations every single second! It's like having an ultra-fast calculator that works quicker than you can blink an eye.
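Where does "trillions per second" come from? A chip's theoretical peak throughput is roughly cores × clock speed × operations per core per clock cycle. The Python sketch below works through that arithmetic; the core counts and clock speeds are hypothetical round numbers, not the specs of any real product, and real workloads typically reach only a fraction of peak.

```python
def peak_flops(cores: int, clock_hz: float, flops_per_cycle: int = 2) -> float:
    """Theoretical peak floating-point operations per second.

    flops_per_cycle defaults to 2 because one fused multiply-add (a * b + c)
    is conventionally counted as two operations.
    """
    return cores * clock_hz * flops_per_cycle


# Hypothetical GPU: many simple cores at a modest clock speed.
gpu = peak_flops(cores=10_000, clock_hz=1.5e9)

# Hypothetical CPU: a few powerful cores at a higher clock, each using wide
# vector instructions to do 32 operations per cycle.
cpu = peak_flops(cores=16, clock_hz=3.0e9, flops_per_cycle=32)

print(f"GPU peak: {gpu / 1e12:.1f} trillion ops/s")  # GPU peak: 30.0 trillion ops/s
print(f"CPU peak: {cpu / 1e12:.2f} trillion ops/s")  # CPU peak: 1.54 trillion ops/s
```

Even though each CPU core is individually much more capable, sheer core count gives the hypothetical GPU roughly 20 times the peak throughput.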

This insane number-crunching ability makes GPUs perfectly suited for training the artificial intelligence (AI) models called neural networks. Neural networks are complex systems loosely inspired by the human brain, with many layers of interconnected nodes.

Training these large-scale neural networks on traditional CPUs, which have a handful of cores optimized for sequential work, would be excruciatingly slow. GPUs, by contrast, gobble up all that math in a flash. They're optimally designed for the job!
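Here is a minimal sketch of what "layers of interconnected nodes" means in practice, in plain Python with made-up weights: each node computes a weighted sum of its inputs plus a bias, then applies an activation function (ReLU here, one common choice). The helper names and layer sizes are illustrative assumptions. Counting the multiply-adds shows why the math piles up so quickly.

```python
def dense_layer(inputs, weights, biases):
    # Each output node: weighted sum of all inputs plus a bias, then ReLU.
    # weights[j][i] is the connection strength from input i to node j.
    outputs = []
    for w_row, b in zip(weights, biases):
        total = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(max(0.0, total))  # ReLU: negative values become 0
    return outputs


def multiply_adds(layer_sizes):
    # A layer with n inputs and m nodes needs n * m multiply-adds per example.
    return sum(n * m for n, m in zip(layer_sizes, layer_sizes[1:]))


# Tiny two-layer network with made-up weights.
x = [1.0, 2.0]
hidden = dense_layer(x, weights=[[0.5, -1.0], [1.0, 1.0]], biases=[0.0, 0.5])
output = dense_layer(hidden, weights=[[2.0, 1.0]], biases=[-1.0])
print(hidden, output)  # [0.0, 3.5] [2.5]

# Hypothetical network: 784 inputs (e.g. a 28x28 image), two hidden layers of 512, 10 outputs.
print(multiply_adds([784, 512, 512, 10]))  # 668672
```

That is about 670,000 multiply-adds for one example in one forward pass. Training repeats this, plus a backward pass to update the weights, over millions of examples, and every node in a layer can be computed independently, which is exactly the kind of work GPUs parallelize.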

Here's how GPUs and CPUs compare:

- Parallel vs. Sequential Processing: GPUs excel at parallel processing, dividing tasks into smaller subtasks processed simultaneously, while CPUs handle tasks sequentially, one step at a time.

- Speed and Efficiency: For highly parallel workloads such as neural-network training, GPUs are often estimated to be 10-50 times faster than CPUs, though the actual speedup depends on the task and the hardware.

- Specialization: GPUs are built for exactly the kind of computation AI relies on most: the same simple math (such as matrix multiplication) applied to huge amounts of data at once.
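To put the 10-50x estimate in concrete terms, speedup S is simply CPU time divided by GPU time. For a hypothetical training job that takes 100 hours on a CPU:

```latex
S = \frac{T_{\text{CPU}}}{T_{\text{GPU}}}
\quad\Longrightarrow\quad
T_{\text{GPU}} = \frac{T_{\text{CPU}}}{S}:
\qquad \frac{100\ \text{h}}{10} = 10\ \text{h},
\qquad \frac{100\ \text{h}}{50} = 2\ \text{h}
```

So the same job would finish in somewhere between 2 and 10 hours, going from about four days to under half a day.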

So next time you hear "GPU", think parallel processing, speed, and specialization for AI. That's what makes them crucial for developing today's artificial intelligence! [4][5]

The Connection Between Large Language Models (LLMs) and GPU Chips:

Training an LLM, and to a lesser extent running it, demands substantial computational power.

The GPU chip serves as a supercharged brain, aiding in training LLMs by processing vast amounts of data rapidly.
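To get a feel for "substantial computational power", a commonly used rule of thumb estimates the compute needed to train a transformer language model at about 6 floating-point operations per parameter per training token (roughly 2 for the forward pass and 4 for the backward pass). The Python sketch below applies it; the model size, token count, and sustained per-GPU throughput are hypothetical round numbers, the multi-GPU figure assumes perfect scaling, and real runs vary widely.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    # Rule-of-thumb estimate: ~6 FLOPs per parameter per training token.
    return 6 * n_params * n_tokens


def gpu_hours(total_flops: float, flops_per_gpu: float) -> float:
    # Convert total work into GPU-hours at an assumed *sustained* throughput
    # (real training runs well below a chip's theoretical peak).
    return total_flops / flops_per_gpu / 3600


# Hypothetical model: 7 billion parameters trained on 1 trillion tokens.
total = training_flops(n_params=7e9, n_tokens=1e12)

# Hypothetical sustained throughput of 100 trillion FLOPs per second per GPU.
hours = gpu_hours(total, flops_per_gpu=1e14)

print(f"Total compute: {total:.1e} FLOPs")          # Total compute: 4.2e+22 FLOPs
print(f"Single GPU:    {hours:,.0f} GPU-hours")     # Single GPU:    116,667 GPU-hours
print(f"1,000 GPUs:    {hours / 1000:,.0f} hours")  # 1,000 GPUs:    117 hours
```

That works out to roughly 13 years on one GPU, or about five days spread perfectly across 1,000 GPUs, which is why LLM training runs on large GPU clusters.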

The software that trains and runs LLMs talks to the GPU chip through Nvidia's CUDA (Compute Unified Device Architecture), a parallel computing platform and programming model.

CUDA acts as a communication bridge: deep-learning frameworks such as PyTorch use it to tell the GPU how to split an LLM's math across thousands of cores at once, which speeds up learning and language-skill improvement.
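Real CUDA code is written in CUDA C/C++, but the core idea can be emulated in plain Python. In CUDA, you write a small function called a kernel and launch it on a grid of thread blocks; each thread combines its block index and thread index to pick the one piece of data it handles. The sketch below imitates that indexing scheme with ordinary loops (`vector_add_kernel` and `launch` are hypothetical names, not part of any library); on an actual GPU, all of these threads would run at the same time.

```python
def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    # Same formula CUDA kernels use to find "their" element:
    # i = blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    if i < len(out):  # guard: the last block may have spare threads
        out[i] = a[i] + b[i]


def launch(kernel, grid_dim, block_dim, *args):
    # Emulates a launch like kernel<<<grid_dim, block_dim>>>(args) by looping
    # sequentially; a GPU would run every (block, thread) pair in parallel.
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


n = 10
a = [float(i) for i in range(n)]
b = [10.0 * i for i in range(n)]
out = [0.0] * n

block_dim = 4                                # threads per block
grid_dim = (n + block_dim - 1) // block_dim  # ceiling division: 3 blocks
launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
print(out)  # [0.0, 11.0, 22.0, 33.0, 44.0, 55.0, 66.0, 77.0, 88.0, 99.0]
```

The `if i < len(out)` guard matters because 3 blocks × 4 threads = 12 threads for only 10 elements; real CUDA kernels include the same kind of bounds check.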

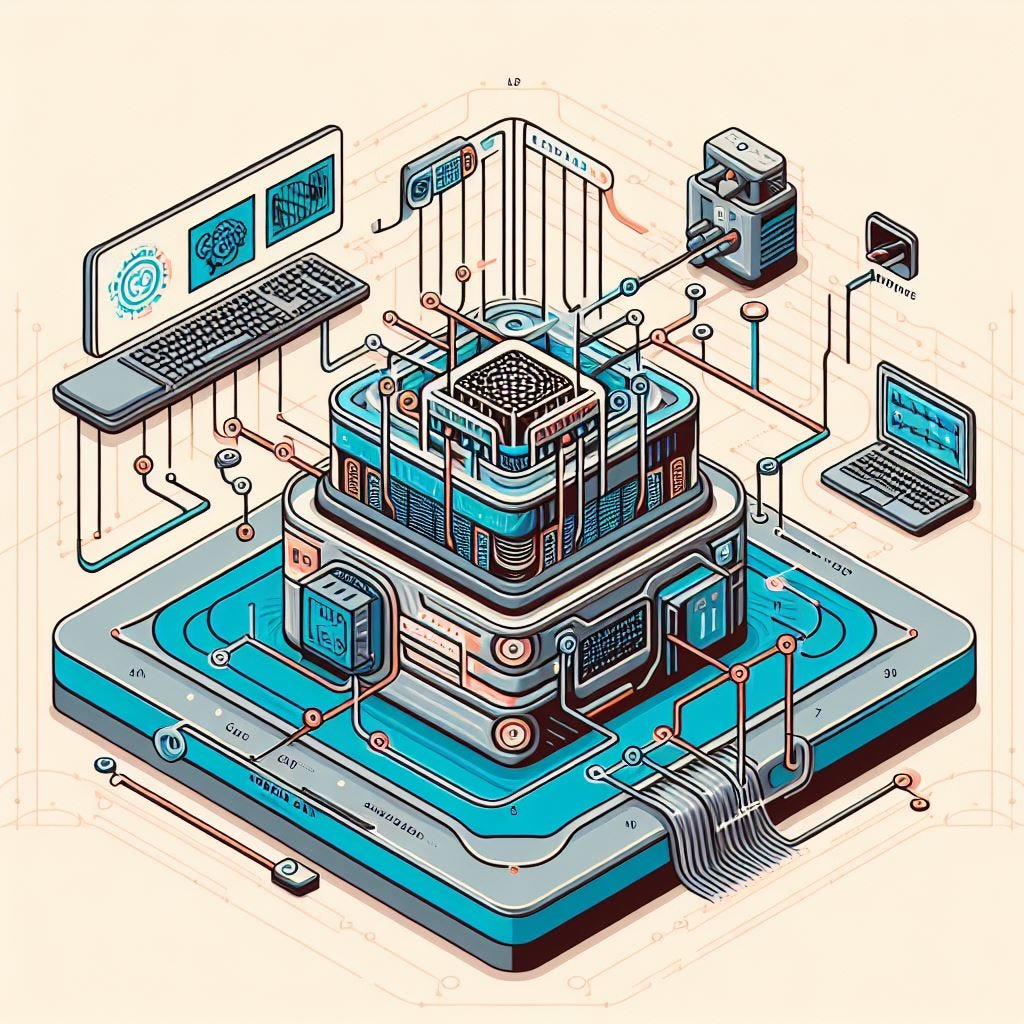

In the diagram above, envision the LLM (smart assistant) connected to the GPU chip (powerful engine) through CUDA (communication bridge). This connection lets the LLM learn and grow by tapping into the GPU's computational power, turning it into an efficient language processor, much like a smart assistant learning from a supercharged brain.

As AI continues to advance, companies like Nvidia, AMD, and Intel are designing new GPUs and accelerators specialized for AI applications. Microsoft is developing its own custom AI chips [6] to power large language model services like Bing Copilot. Cloud computing services such as AWS and Google Cloud offer GPU-based systems on demand, so teams can rent the computing power they need for AI research instead of buying hardware.

Environmental Considerations:

Efficiency is crucial when designing AI systems: as AI grows, so does the demand for chips, computing power, and the energy they consume. When designing AI systems:

- Build energy-efficient algorithms that get the most useful work out of each GPU, for example by avoiding idle time and redundant computation.

- Research and shift to lightweight, efficient AI models (such as smaller or distilled models) where they meet the need, instead of defaulting to the largest, most resource-intensive ones.

- Promote green software engineering practices that weigh carbon footprint and energy use alongside functionality.

- Use carbon offsets to mitigate environmental impact while cloud compute needs increase in the short term, and transition data centers to renewable energy.
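Weighing energy use and carbon footprint starts with simple arithmetic: energy is power multiplied by time, and emissions are energy multiplied by the carbon intensity of the electricity grid. The Python sketch below uses hypothetical figures (8 GPUs drawing 400 W each for 24 hours, and two illustrative grid intensities) rather than measurements of any real system, and it ignores data-center overhead such as cooling.

```python
def energy_kwh(n_gpus: int, watts_per_gpu: float, hours: float) -> float:
    # Energy (kWh) = total power (W) x time (h) / 1000
    return n_gpus * watts_per_gpu * hours / 1000


def emissions_kg(kwh: float, kg_co2_per_kwh: float) -> float:
    # Emissions depend on how clean the electricity powering the GPUs is.
    return kwh * kg_co2_per_kwh


kwh = energy_kwh(n_gpus=8, watts_per_gpu=400, hours=24)

# Illustrative carbon intensities (kg CO2 per kWh), not real grid data.
fossil_heavy = emissions_kg(kwh, 0.4)
renewable_heavy = emissions_kg(kwh, 0.05)

print(f"Energy: {kwh:.1f} kWh")                              # Energy: 76.8 kWh
print(f"Fossil-heavy grid:    {fossil_heavy:.1f} kg CO2")     # Fossil-heavy grid:    30.7 kg CO2
print(f"Renewable-heavy grid: {renewable_heavy:.1f} kg CO2")  # Renewable-heavy grid: 3.8 kg CO2
```

The same job produces about eight times less CO2 on the cleaner grid, which is why efficient code and renewable-powered data centers both matter.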

As we build more powerful AI, efficiency on GPUs will be critical, both for performance and for environmental impact. Beyond GPUs, Neural Processing Units (NPUs), Language Processing Units (LPUs), and Tensor Processing Units (TPUs) serve as specialized hardware accelerators tailored for machine learning tasks. In the next article of this series, we will dig into the specifics of NPUs, LPUs, and TPUs.

AI Tools To Boost Your Productivity This Week

Otter AI - This innovative AI meeting assistant boosts productivity by providing real-time transcription, automatic highlights, and suggested action items during meetings. Otter generates shareable, searchable notes, allowing you to easily find key information in transcripts. Its automated documentation saves time and effort.

RambleFix - This groundbreaking AI writing tool lets you speak freely instead of typing. It listens to your spoken words and uses artificial intelligence to turn them into clear, concise written text, tidying up disorganized thoughts into polished writing.

Below is a quick recap of the key AI concepts covered in this series:

Introduction to AI & ML

Supervised & Unsupervised Learning with real world examples

Introduction to Deep Learning & Generative AI

Differences between Machine Learning, Deep Learning & Generative AI

Product Managers and AI can co-exist

LLMs, Challenges & Mitigation Strategies

Introduction to LLMs, Vectors, Tensors, Transformers with examples

The Future of LLMs

Challenges with LLMs and Potential Solutions

Reducing Hallucinations in LLMs

Why hallucinations occur in LLMs

Introduction to RAG

Use cases to improve Enterprise customer experience

GPUs and their Connection to LLMs

Evolution of GPUs from Gaming to AI

Differences between CPUs and GPUs

The Connection between LLMs and GPU Chips

Environmental Considerations for Efficient AI